
In the latter part of Web Archiving Week (#waweek2017) from Wednesday to Friday, Sawood and I attended the International Internet Preservation Consortium (IIPC) Web Archiving Conference (WAC) 2017, held jointly with the RESAW Conference at the Senate House and British Library Knowledge Center in London. Each of the three days had multiple tracks. Reported here are the presentations I attended.

Prior to the keynote, Jane Winters (@jfwinters) of University of London and Nicholas Taylor (@nullhandle) welcomed the crowd with admiration toward the Senate House venue. Leah Lievrouw (@Leah53) from UCLA then began the keynote. In her talk, she walked through the evolution of the Internet as a medium to access information prior to and since the Web.

With reservation toward the "Web 3.0" term, Leah described a new era in the shift from documents to conversations, to big data. With a focus toward the conference, Leah described the social science and cultural breakdown as it has applied to each Web era.

After the keynote, two concurrent presentation tracks proceeded. I attended a track where Jefferson Bailey (@jefferson_bail) presented "Advancing access and interface for research use of web archives". First citing an updated metric of the Internet Archive's holdings (see Ian's tweet below), Jefferson provided an update on some contemporary holdings and collections by IA, inclusive of some of the details on his GifCities project (introduced with IA's 20th anniversary, see our celebration), which provides searchable access to the archive's holdings of the animated GIFs that once resided on Geocities.com.

In addition to this, Jefferson also highlighted the beta features of the Wayback Machine, inclusive of an anchor text-based search algorithm, a MIME-type breakdown, and much more. He also described some other available APIs, inclusive of one built on top of WAT files, a metadata format derived from WARC.

Through recent efforts by IA for their anniversary, they also had put together a collection of military PowerPoint slide decks.

Following Jefferson, Niels Brügger (@NielsBr) led a panel consisting of a subset of authors from the first issue of his journal, "Internet Histories". Marc Weber stated that the journal had been in the works for a while. When he initially told people he was looking at the history of the Web in the 1990s, people were puzzled. He went on to describe the Internet as being in its Victorian era, having evolved from 170 years of the telephone and 60 years of being connected through the medium. Of the vast history of the Internet we have preserved relatively little. He finished by noting that we need to treat history and preservation as something that should be done quickly, as we cannot go back later to find the materials if they are not preserved.

Steve Jones of University of Illinois at Chicago spoke second about the Programmed Logic for Automatic Teaching Operations (PLATO) system. There were two key interests, he said, in developing for PLATO -- multiplayer games and communication. The original PLATO lab was in a large room and, out of laziness, the developers could not be bothered to walk to each other's desks, so they developed the "Talk" system to communicate and save messages so the same message would not have to be communicated twice. PLATO was not designed for lay users but for professionals, he said, but was also used by university and high school students. "You saw changes between developers and community values," he said, "seeing development of affordances in the context of the discourse of the developers that archived a set of discussions." Access to the PLATO system is still available.

Jane Winters presented third on the panel stating that there is a lot of archival content that has seen little research engagement. This may be due to continuing work on digitizing traditional texts but it is hard to engage with the history of the 21st century without engaging with the Web. The absence of metadata is another issue. "Our histories are almost inherently online", she said, "but they only gain any real permanence through preservation in Web archives. That's why humanists and historians really need to engage with them."

The tracks then joined together for lunch and split back into separate sessions, where I attended the presentation, "A temporal exploration of the composition of the UK Government Web Archive". In this presentation they examined the evolution of the UK National Archives (@uknatarchives). This was followed by a presentation by Caroline Nyvang (@caobilbao) of the Royal Danish Library that examined current web referencing practices. Her group proposed the persistent web identifier (PWID) format for referencing Web archives, which was eerily familiar to the URI semantics often used in another protocol.

Andrew (Andy) Jackson (@anjacks0n) then took the stage to discuss the UK Web Archive's (@UKWebArchive) catalog and challenges they have faced while considering the inclusion of Web archive material. He detailed a process, represented by a hierarchical diagram, to describe the sorts of transformations required in going from the data to reports and indexes about the data. In doing so, he also juxtaposed and compared his process with other archival workflows that would be performed in a conventional library catalog architecture.

Following Andy, Nicola Bingham (@NicolaJBingham) discussed curating collections at the UK Web Archive, which has been archiving since 2013, and challenges in determining the boundaries and scope of what should be collected. She encouraged researchers to engage to shape their collections. Their current holdings consist of about 400 terabytes with 11 to 12 billion records, growing 60 to 70 terabytes and 3 billion records per year. Their primary mission is to collect UK web sites under UK TLDs (like .uk, .scot, .cymru, etc.). Domains are currently capped at 512 megabytes being preserved but even then other technical limitations exist in capture (like proprietary formats, plugins, robots.txt, etc.).

When Nicola finished, there was a short break. Following that, I traveled upstairs of the Senate House to the "Data, process, and results" workshop, led by Emily Maemura (@emilymaemura). She first described three different research projects where each of the researchers was present and asked attendees to break out into groups to discuss the various facets of each project in detail with each researcher. I opted to discuss Frederico Nanni's (@f_nanni) work with him and a group of other attendees. His work consisted of analyzing and resolving issues in the preservation of the web site of the University of Bologna. The site specifies a robots.txt exclusion, which makes the captures inaccessible to the public, but through his investigation and efforts he was able to change their local policy to allow for further examination of the captures.

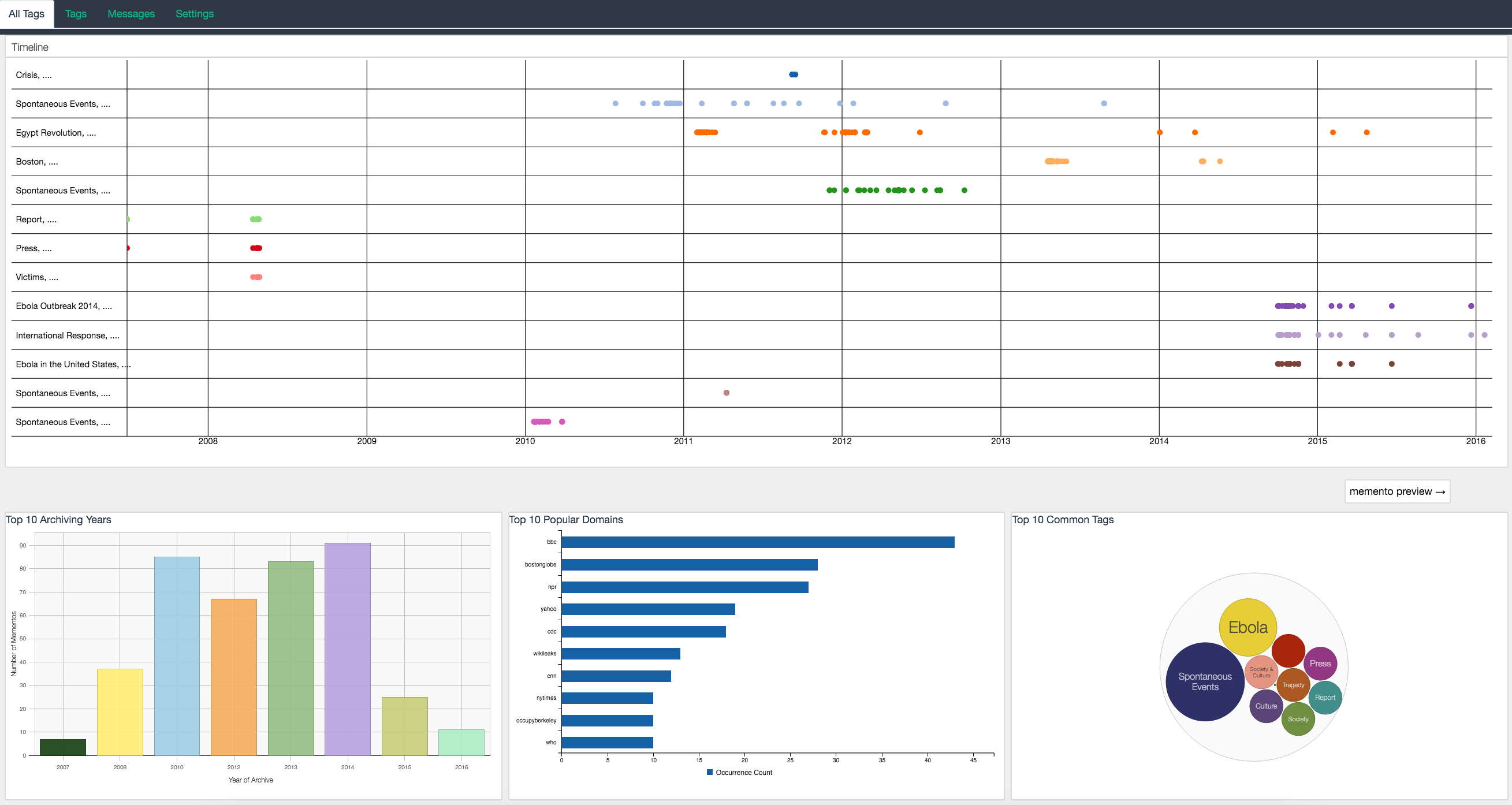

With the completion of the workshop, everyone still in attendance joined back together in the Chancellor's Hall of the Senate House as Ian Milligan (@ianmilligan1) and Matthew Weber (@docmattweber) gave a wrap-up of the Archives Unleashed 4.0 Datathon, which had occurred prior to the conference on Monday and Tuesday. Part of the wrap-up was time given to the three top-ranked projects as determined by judges from the British Library. The group I was part of at the Datathon, "Team Intersection", was one of the three, so Jess Ogden (@jessogden) gave a summary presentation. More information on our intersection analysis between multiple data sets can be had on our GitHub.io page. A blog post with more details will be posted here in the coming days detailing our report of the Datathon.

Following the AU 4.0 wrap-up, the audience moved to the British Library Knowledge Center for a panel titled, "Web Archives: truth, lies and politics in the 21st century". I was unable to attend this, opting for further refinement of the two presentations I was to give on the second day of IIPC WAC 2017 (see below).

Day Two

The second day of the conference was split into three concurrent tracks -- two at the Senate House and a third at the British Library Knowledge Center. Given I was slated to give two presentations at the latter (and the venues were about 0.8 miles apart), I opted to attend the sessions at the BL.

Nicholas Taylor opened the session with the scope of the presentations for the day and introduced the first three presenters. First on the bill was Andy Jackson with "Digging documents out of the web archives." He initially compared this talk to the one he had given the day prior (see above) relating to the workflows in cataloging items. In the second day's talk, he discussed the process of the Digital ePrints team and the inefficiencies of its manual process for ingesting new content. Based on this process, his team set up a new harvester that watches targets, extracts the document and machine-readable metadata from the targets, and submits it to the catalog. Still, issues remain, one being what to identify as the "publication" for e-prints relative to the landing page, assets, and what is actually cataloged. He discussed the need for further experimentation using a variety of workflows to optimize the outcome for quality and to ensure the results are discoverable and accessible and the process remains mostly automated.

Ian Milligan and Nick Ruest (@ruebot) followed Andy with their presentation on making their Canadian web archival data sets easier to use. "We want web archives to be used on page 150 in some book", they said, reinforcing that they want the archives to inform the insights instead of the subject necessarily being about the archives themselves. They also discussed their extraction and processing workflow, from acquiring the data from the Internet Archive to using Warcbase and other command-line tools to make the data contained within the archives more accessible. Nick said that since last year when they presented webarchives.ca, they have indexed 10 terabytes representative of over 200 million Solr docs. Ian also discussed derivative datasets they had produced inclusive of domain and URI counts, full-text, and graphs. Making the derivative data sets accessible and usable by researchers is a first step in their work being used on page 150.

Greg Wiedeman (@GregWiedeman) presented third in the technical session by first giving context of his work at the University at Albany (@ualbany) where they are required to preserve state records with no dedicated web archives staff. Some records have paper equivalents like archived copies of their Undergraduate Bulletins while digital versions might consist of Microsoft Word documents corresponding to the paper copies. They are using DACS to describe archives, so he questioned whether they should use it for Web archives. On a technical level, he runs a Python script to look at their collection of CDXs, which schedules a crawl that is displayed in their catalog as it completes. "Users need to understand where web archives come from", he says, "and need provenance to frame their research questions, which will add weight to their research."

A short break commenced, followed by Jefferson Bailey presenting, "Who, what, when, where, why, WARC: new tools at the Internet Archive". Initially apologizing for repetition of his prior day's presentation, Jefferson went into some technical details of statistics IA has generated, APIs they have to offer, and new interfaces with media queries of a variety of sorts. They also have begun to use Simhash to identify dissimilarity between related documents.

I (Mat Kelly, @machawk1) presented next with "Archive What I See Now – Personal Web Archiving with WARCs". In this presentation I described the advancements we had made to WARCreate, WAIL, and Mink with support from the National Endowment for the Humanities, which we have reported on in a few prior blog posts. This presentation served as a wrap-up of new modes added to WARCreate, the evolution of WAIL (See Lipstick or Ham then Electric WAILs and Ham), and integration of Mink (#mink #mink #mink) with local Web archives. Slides below for your viewing pleasure.

Lozana Rossenova (@LozanaRossenova) and Ilya Kreymer (@IlyaKreymer) talked next about Webrecorder and namely about remote browsers. Showing a live example of viewing a web archive with a contemporary browser, technologies that are no longer supported are not replayed as expected, often not being visible at all. Their work allows a user to replicate the original experience of the browser of the day to use the technologies as they were (e.g., Flash/Java applet rendering) for a more accurate portrayal of how the page existed at the time. This is particularly important for replicating art work that is dependent on these technologies to display. Ilya also described their Web Archiving Manifest (WAM) format to allow a collection of Web archives to be used in replaying Web pages with fetches performed at the time of replay. This patching technique allows for more accurate replication of the page at a time.

After Lozana and Ilya finished, the session broke for lunch then reconvened with Fernando Melo (@Fernando___Melo) describing their work at the publicly available Portuguese Web Archive. He showed their work building an image search of their archive using an API to describe Charlie Hebdo-related captures. His co-presenter João Nobre went into further details of the image search API, including the ability to parameterize the search by query string, timestamp, first-capture time, and whether it was "safe". Discussion from the audience afterward asked of the pair what their basis was of a "safe" image.

Nicholas Taylor spoke about recent work with LOCKSS and WASAPI and the re-architecting of the former to open the potential for further integration with other Web archiving technologies and tools. They recently built a service for bibliographic extraction of metadata for Web harvest and file transfer content, which can then be mapped to the DOM tree. They also performed further work on an audit and repair protocol to validate the integrity of distributed copies.

Jefferson again presented to discuss IMLS-funded APIs they are developing to test transfers using WASAPI to their partners. His group ran surveys to show that 15-20% of Archive-It users download their WARCs to be stored locally. Their WASAPI Data Transfer API returns a JSON object derived from the set of WARCs transferred inclusive of fields like pagination, count, requested URI, etc. Other fields representative of an Archive-It ID, checksums, and collection information are also present. Naomi Dushay (@ndushay) then showed a video of an overview of their deployment procedure.
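Since the response carries checksums, a partner storing WARCs locally could check each downloaded file against the listing. The following is only a minimal sketch of that idea and not Archive-It's implementation: the field names (`count`, `files`, `filename`, `checksums`, `sha1`) and the sample listing are assumptions for illustration, and `read_bytes` is a hypothetical callback standing in for however local copies are read.

```python
import hashlib
import json


def verify_files(response_text, read_bytes):
    """Compare each listed SHA-1 checksum with the digest of the local copy.

    response_text: JSON text of a transfer listing (field names are assumed).
    read_bytes:    hypothetical callable mapping a filename to its local bytes.
    Returns a dict of {filename: True if the checksums match, else False}.
    """
    listing = json.loads(response_text)
    results = {}
    for entry in listing.get("files", []):
        expected = entry.get("checksums", {}).get("sha1")
        actual = hashlib.sha1(read_bytes(entry["filename"])).hexdigest()
        results[entry["filename"]] = (expected == actual)
    return results


# Made-up in-memory "local store" and listing, for illustration only.
local_store = {"a.warc.gz": b"first warc", "b.warc.gz": b"second warc"}
sample_listing = json.dumps({
    "count": 2,
    "files": [
        {"filename": "a.warc.gz",
         "checksums": {"sha1": hashlib.sha1(b"first warc").hexdigest()}},
        {"filename": "b.warc.gz",
         "checksums": {"sha1": "0" * 40}},  # deliberately wrong checksum
    ],
})

report = verify_files(sample_listing, local_store.__getitem__)
```

Here `report` flags `b.warc.gz` as a mismatch, which is the kind of signal that would prompt a re-download.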

After another short break, Jack Cushman & Ilya Kreymer tag-teamed to present, "Thinking like a hacker: Security Issues in Web Capture and Playback". Through a mock dialog, they discussed issues in securing Web archives and a suite of approaches challenging users to compromise a dummy archive. Ilya and Jack also iterated through various security problems that might arise in serving, storing, and accessing Web archives inclusive of stealing cookies, frame hijacking to display a false record, banner spoofing, etc.

Following Ilya and Jack, I (@machawk1, again) and David Dias (@daviddias) presented, "A Collaborative, Secure, and Private InterPlanetary WayBack Web Archiving System using IPFS". This presentation served as follow-on work from the InterPlanetary Wayback (ipwb) project Sawood (@ibnesayeed) had originally built at the Archives Unleashed 1.0 then presented at JCDL 2016, WADL 2016, and TPDL 2016. This work, in collaboration with David of Protocol Labs, who created the InterPlanetary File System (IPFS), was to display some advancements both in IPWB and IPFS. David began with an overview of IPFS, what problem it's trying to solve, its system of content addressing, and mechanism to facilitate object permanence. I discussed, as with previous presentations, IPWB's integration of web archive (WARC) files with IPFS using an indexing and replay system that utilizes the CDXJ format. One item in David's recent work is bringing IPFS to browsers with his JavaScript port, which interfaces with IPFS from the browser without the need for a running local IPFS daemon. I had recently introduced encryption and decryption of WARC content to IPWB, allowing for further permanence of archival Web data that may be sensitive in nature. To close the session, we performed a live demo of IPWB consisting of data replication of WARCs from another machine onto the presentation machine.
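For readers unfamiliar with CDXJ, each index line is roughly a lookup key, a 14-digit timestamp, and a JSON object of fields. The sketch below shows how a replay system might read such lines; it is an illustration and not IPWB's code. The sample records, the `locator` field, and the placeholder hash values are assumptions, and `parse_cdxj_line` and `captures_for` are hypothetical helper names.

```python
import json
from typing import NamedTuple


class CdxjRecord(NamedTuple):
    key: str        # canonicalized URI used as the lookup key
    timestamp: str  # 14-digit capture datetime, YYYYMMDDhhmmss
    fields: dict    # JSON block describing where the capture lives


def parse_cdxj_line(line):
    """Split a CDXJ line into key, timestamp, and the parsed JSON fields."""
    key, timestamp, payload = line.strip().split(" ", 2)
    return CdxjRecord(key, timestamp, json.loads(payload))


def captures_for(records, key):
    """Return the records for one key, oldest capture first."""
    return sorted((r for r in records if r.key == key),
                  key=lambda r: r.timestamp)


# Hypothetical index lines; the hashes are placeholders, not real IPFS hashes.
sample_index = [
    'com,example)/ 20170616120000 {"locator": "urn:ipfs/HEADERHASH2/PAYLOADHASH2", "status_code": "200"}',
    'com,example)/ 20160101000000 {"locator": "urn:ipfs/HEADERHASH1/PAYLOADHASH1", "status_code": "200"}',
    'org,example)/about 20170101000000 {"locator": "urn:ipfs/HEADERHASH3/PAYLOADHASH3", "status_code": "404"}',
]

records = [parse_cdxj_line(line) for line in sample_index]
example_captures = captures_for(records, "com,example)/")
```

Keeping the storage pointer inside the JSON block is what lets the index stay a small text file while the archived content itself lives elsewhere.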

Following our presentation, Andy Jackson asked for feedback on the sessions and what IIPC can do to support the enthusiasm for open source and collaborative approaches. Discussions commenced among the attendees about how to optimize funding for events, with Jefferson Bailey reiterating that travel eats away at a large amount of the cost for such events. Further discussions were had about why the events were not recorded and on how to remodel the Hackathon events on the likes of other organizations like Mozilla's Global Sprints, the organization of events by the NodeJS community, and sponsoring developers for the Google Summer of Code. The audience then had further discussions on how to follow up and communicate once the day was over, inclusive of the IIPC Slack Channel and the IIPC GitHub organization. With that, the second day concluded.

Day Three

By Friday, with my presentations for the trip complete, I now had but one obligation for the conference and the week (other than write my dissertation, of course): to write the blog post you are reading. This was performed while preparing for JCDL 2017 in Toronto the following week (that I attended by proxy, post coming soon). I missed out on the morning sessions, unfortunately, but joined in to catch the end of João Gomes' (@jgomespt) presentation on Arquivo.pt, also presented the prior day. I was saddened to know that I had missed Martin Klein's (@mart1nkle1n) "Uniform Access to Raw Mementos" detailing his, Los Alamos', and ODU's recent collaborative work in extending Memento to support access to unmodified content, among other characteristics that cause a "Raw Memento" to be transformed. WS-DL's own Shawn Jones (@shawnmjones) has blogged about this on numerous occasions, see Mementos in the Raw and Take Two.

The first full session I was able to attend was Abbie Grotke's (@agrotke) presentation, "Oh my, how the archive has grown..." that detailed the progress and size that Library of Congress's Web archive has experienced with minimal growth in staff despite the substantial increase in size of their holdings. While captivated, I came to know via the conference Twitter stream that Martin's third presentation of the day coincided with Abbie's. Sorry, Martin.

I did manage to switch rooms to see Nicholas Taylor discuss using Web archives in legal cases. He stated that in some cases, social media used by courts may only exist in Web archives and that courts now accept archival web captures as evidence. The first instance of using IA's Wayback Machine was in 2004 and its use in courts has been contested many times to no avail. The Internet Archive provided affidavit guidance that suggested asking the court to ensure usage of the archive will consider captures as valid evidence. Nicholas alluded to FRE 201 that allows facts to be used as evidence, the basis for which the archive has been used. He also cited various cases where expert testimony of Web archives was used (Khoday v. Symantec Corp., et al.), a defamation case where the IA disclaimer dismissed using it as evidence (Judy Stabile v. Paul Smith Limited et al.), and others. Nicholas also cited WS-DL's own Scott Ainsworth's (@Galsondor) work on Temporal Coherence and how a composite memento may not have existed as displayed.

Following Nicholas, Anastasia Aizman and Matt Phillips (@this_phillips) presented "Instruments for Web archive comparison in Perma.cc". In their work with Harvard's Library Innovation Lab (with which WS-DL's Alex Nwala was recently a Summer fellow), the Perma team has a goal to allow users to cite things on the Web, create WARCs of those things, then be able to organize the captures. Their initial work with the Supreme Court corpus from 1996 to present found that 70% of the references had rotted. Anastasia asked, "How do we know when a web site has changed and how do we know which changes are important?"

They used a variety of ways to determine significant change inclusive of MinHash (via calculating the Jaccard Coefficients), Hamming Distance (via SimHash), and Sequence Matching using a Baseline. As a sample corpus, they took over 2,000 Washington Post articles consisting of over 12,000 resources, examined the SimHash and found big gaps. For MinHash, the distances appeared much closer. In their implementation, they show this to the user on Perma via their banner that provides an option to highlight file changes between sets of documents.
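To make the three measures concrete, here is a small sketch of each applied to two versions of a sentence. This is not Perma's implementation: the word-shingling, the BLAKE2-based hash, the 64-slot signature size, and all function names are my own assumptions for illustration, and Python's `difflib.SequenceMatcher` stands in for whatever sequence matcher they used.

```python
import hashlib
from difflib import SequenceMatcher


def shingles(text, k=3):
    """Return the set of k-word shingles in the text (assumed tokenization)."""
    words = text.lower().split()
    return {" ".join(words[i:i + k]) for i in range(max(1, len(words) - k + 1))}


def jaccard(a, b):
    """Exact Jaccard coefficient of two sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def _hash64(token, seed=0):
    """Deterministic 64-bit hash of a token; the seed selects a hash function."""
    digest = hashlib.blake2b(token.encode("utf-8"), digest_size=8,
                             salt=seed.to_bytes(8, "little")).digest()
    return int.from_bytes(digest, "big")


def minhash_signature(items, num_hashes=64):
    """MinHash signature: the minimum hash of the set under each seeded hash."""
    return [min(_hash64(item, seed) for item in items)
            for seed in range(num_hashes)]


def minhash_similarity(sig_a, sig_b):
    """Fraction of matching slots, which estimates the Jaccard coefficient."""
    return sum(x == y for x, y in zip(sig_a, sig_b)) / len(sig_a)


def simhash(items, bits=64):
    """SimHash fingerprint: a per-bit majority vote over all item hashes."""
    counts = [0] * bits
    for item in items:
        h = _hash64(item)
        for i in range(bits):
            counts[i] += 1 if (h >> i) & 1 else -1
    return sum(1 << i for i, c in enumerate(counts) if c > 0)


def hamming(a, b):
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


old = "The court cited a web page that has since changed a little"
new = "The court cited a web page that has since changed a lot"
a, b = shingles(old), shingles(new)

exact = jaccard(a, b)
estimate = minhash_similarity(minhash_signature(a), minhash_signature(b))
distance = hamming(simhash(a), simhash(b))
ratio = SequenceMatcher(None, old, new).ratio()
```

The measures answer slightly different questions: MinHash estimates set overlap, the SimHash Hamming distance counts differing fingerprint bits, and the sequence matcher is sensitive to ordering, so it is plausible for them to disagree on how "big" a change is.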

There was a brief break then I attended a session where Peter Webster (@pj_webster) and Chris Fryer (@C_Fryer) discussed their work with the UK Parliamentary Archives. Their recent work consists of capturing official social media feeds of the members of parliament, critical as it captures their relationship with the public. They sought to examine the patterns of use and access by the members and determine the level of understanding of the users of their archive. "Users are hard to find and engage", they said, citing that users were largely ignorant about what web archives are. In a second study, they found that users wanted a mechanism for discovery that mapped to an internal view of how the parliament functions. Their studies found many things from web archives that users do not want, but a takeaway is that they uncovered some issues in their assumptions and their study raised the profile of the Parliamentary Web Archives among their colleagues.

Emily Maemura and Nicholas Worby presented next with their discussion on origin studies as it relates to web archives, provenance, and trust. They examined decisions made in creating collections in Archive-It by the University of Toronto Libraries, namely the collections involving the Canadian political parties, the Toronto 2015 Pan Am Games, and their Global Summitry Archive. From these they determined that the distinguishing traits of the three were, respectively, being long running, covering a one-time event, and being a collaboratively created archive. For the candidates' sites, they also noticed the implementation of robots.txt exclusions in a supposed attempt to prevent the sites from being archived.

Alexis Antracoli and Jackie Dooley (@minniedw) presented next about their OCLC Research Library Partnership web archive working group. Their examination determined that discoverability was the primary issue for users. One such issue was their example of Archive-It being used at Princeton without that fact being documented. Through their study they established use cases for libraries, archives, and researchers. In doing so, they created a data dictionary of characteristics of archives inclusive of 14 data elements like Access/rights, Creator, Description, etc. with many fields having a direct mapping to Dublin Core.

After a short break, the final session began. I attended the session where Jane Winters (@jfwinters) spoke about increasing the visibility of web archives, asking first, "Who is the audience for Web archives?" then enumerating researchers in the arts, humanities and social sciences. She then described various examples in the press relating to web archives inclusive of a Computer Weekly report on Conservatives erasing official records of speeches from IA and Dr. Anat Ben-David's work on getting the .yu TLD restored in IA.

Cynthia Joyce then discussed her work in studying Hurricane Katrina's unsearchable archive. Because New Orleans was not a tech savvy place at the time and it was pre-Twitter, Facebook was young, etc., the personal record was not what it would be were the events to happen today. In her research as a citizen, she attempted to identify themes and stories that would have been missed in mainstream media. She said, "On Archive-It, you can find the Katrina collection ranging from resistance to gratitude." Only 8-9 years later did she collect the information, which many of the writers never expected to be preserved.

For the final presentation of the conference, Colin Post (@werrthe) discussed net-based art and how to go about making such works objects of art history. Colin used Alexei Shulgin's "Homework" as an example that uses pop-ups and self-conscious elements that add to the challenge of preservation. In Natalie Bookchin's course, Alexei Shulgin encouraged artists to turn in homework for grading, also doing so himself. His assignment is dominated by pop-ups, something we view in a different light today. "Archives do not capture the performative aspect of the piece", Colin said. He cited oldweb.today as providing interesting insights into how the page was captured over time with multiple captures being combined. "When I view the whole piece, it is emulated and artificial; it is disintegrated and inauthentic."

Synopsis

The trip proved very valuable to my research. Not documented in this post was the time between sessions, where I was able to speak to some of the presenters about their work as it related to my own, and even to those who were not presenting, finding an intersection in our respective research.

—

Mat (@machawk1)