The day before the International Internet Preservation Consortium (IIPC) General Assembly 2015 we landed in San Francisco, where some delicious Egyptian dishes were waiting for us. Thank you Ahmed, Yasmin, Moustafa, Adrian, and Yusuf for hosting us. It was a great way to spend the evening before the IIPC GA and we were delighted to see you all after a long time.

Day 1

We (Sawood Alam, Michael L. Nelson, and Herbert Van de Sompel) entered the conference hall a few minutes after the session had started, when Michael Keller from Stanford University Libraries was about to leave the stage after the welcome speech. IIPC Chair Paul Wagner gave brief opening remarks and invited the keynote speaker, Vinton Cerf from Google, to the stage. His talk, "Digital Vellum: Interacting with Digital Objects Over Centuries", was informative and delightful. He mentioned that high-density, low-cost storage media are evolving, but the devices to read them might not last long. While mentioning Internet-connected picture frames and surfboards he added that we should not forget about security. To emphasize the security aspect he gave the example that grandparents would love to see their grandchildren in those picture frames, but would not be very happy to see something they do not expect.

Moving on to software emulators, he invited Mahadev Satyanarayanan from Carnegie Mellon University to talk about their software archive and emulator called Olive Archive. Satya gave various live demos including the Great American History Machine, ChemCollective (a copy of the website frozen at a certain time), PowerPoint 4.0 running in Windows 3.1, and the Oregon Trail, all powered by their virtual machines and running in a web browser. He also talked about the architecture of the Olive Archive and how, in the future, multiple instances could be launched and orchestrated to emulate a subset of the Internet for applications that rely on external services, with some instances running those services independently.

In the Q&A session someone asked Cerf how to ask big companies like Google to provide the data about their Crisis Response efforts for archiving after they are done with it. Cerf responded, "you just did", while acknowledging the importance of such data for archiving.

After the break Niels Brügger and Janne Nielsen presented their case study of the Danish websphere under the title "Studying a nation's websphere over time: analytical and methodological considerations". Their study covered website content, file types, file sizes, backgrounds, fonts, layout and, more importantly, the domain names. They also raised points such as the size of the ".dk" domain, geolocation, the inter- and intra-domain link network, and whether Danish websites are actually in the Danish language. They talked about some crawling challenges. Their domain name analysis shows that only 10% of owners hold 50% of all the ".dk" domains. I suspected that this result might be due to private domain name registrations, so I talked to them later; they said they had not considered private registrations, but would revisit their analysis.

Andy Jackson from the British Library took the stage with his presentation titled "Ten years of the UK web archive: what have we saved?". This case study covers three collections: the Open Archive, the Legal Deposit Archive, and the JISC Historical Archive. These collections store over eight billion resources in over 160TB of compressed files and are now growing by about two billion resources per year. With the help of a nice graph he illustrated that not all ".uk" domains are interlinked, so to maximize coverage the crawlers need to include other popular TLDs such as ".com". He also presented an analysis of reference rot and content drift using the "ssdeep" fuzzy hash algorithm. Their analysis shows that 50% of resources are unrecognizable or gone after one year, 60% after two years, and 65% after three years.
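To make the idea of measuring content drift a little more concrete, here is a minimal sketch of how an archived copy could be compared with the live page. It is not the British Library's method: it swaps in Python's standard `difflib.SequenceMatcher` as a stand-in for the "ssdeep" fuzzy hash, and the function names and the 0.5 threshold are assumptions made only for illustration.

```python
from difflib import SequenceMatcher
from typing import Optional


def similarity(archived: str, live: str) -> float:
    """Return a similarity score in [0, 1] between two page texts.

    SequenceMatcher is only a stand-in here for a fuzzy hash such as ssdeep.
    """
    return SequenceMatcher(None, archived, live).ratio()


def classify(archived: str, live: Optional[str], threshold: float = 0.5) -> str:
    """Label a resource as gone, recognizable, or unrecognizable.

    `live` is None when the resource can no longer be fetched (reference rot).
    The threshold is a hypothetical value, not one taken from the talk.
    """
    if live is None:
        return "gone"
    if similarity(archived, live) >= threshold:
        return "recognizable"
    return "unrecognizable"
```

Counting the labels over a sample of URLs captured one, two, and three years ago would give percentages of the kind quoted in the talk.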

I had lunch with Scott Fisher from the California Digital Library. I told him about the various digital library and archiving research projects we are working on at Old Dominion University, and he described the holdings of his library and the challenges they face in upgrading their Wayback to bring Memento support.

After lunch, the keynote speaker of the second session, Cathy Marshall from Texas A&M University, took the stage with a very interesting title, "Should we archive Facebook? Why the users are wrong and the NSA is right". She motivated her talk with some interview-style dialogues built around the primary question, "Do you archive Facebook?", to which the answer was mostly "No!". She highlighted that people have developed a [wrong] sense that Facebook is taking care of their stuff, so they do not have to. She also noted that people usually do not value their Facebook content, or they think it has immediate value but no archival value. In a large survey she asked whether Facebook should be archived; three fourths objected and half of them said "No" unconditionally. In the later part of her talk, she built the story of the marriage of Hal Keeler and Joan Vollmer by stitching together various cuttings from local newspapers. I am not sure I could fully appreciate the story due to the cultural difference, but I laughed when everyone else did. I did, however, follow her efforts and intention to highlight the need to archive social media for future historians. And if anyone asks me whether the NSA is right, my answer would be, "Yes! If they do it correctly with all the context included."

Meghan Dougherty from Loyola University Chicago and Annette Markham from Aarhus University presented their talk "Generating granular evidence of lived experience with the Web: archiving everyday digitally lived life". They illustrated how people, sometimes intentionally and sometimes unintentionally, record moments of their lives with different media. Among the various visual illustrations, I particularly liked the video of a street artist playing with a ring, which was posted on Facebook in a very different context from the one in which it appeared on YouTube. They ended their talk with a hilarious video of Friendster.

Susan Aasman from the University of Groningen presented her talk "Everyday saving practices: "small data" and digital heritage strategies". This talk was full of motivation for why people should care about a personal archive of their daily life moments. She described how the service Kodak Gallery launched in 2001 with the tag-line "live forever" and closed in 2012 after transferring billions of images to Shutterfly, which was only available to US customers. As a result, people from other countries lost their photo memories. She also played the Bye Bye Super 8 video by Johan Kramer, which was amusing and motivating for personal archiving.

After a short break Jane Winters from the Institute of Historical Research, Helen Hockx-Yu from the British Library, and Josh Cowls from the Oxford Internet Institute took the stage with their topic "Big UK domain data for Arts and Humanities", also known as the BUDDAH project. Jane highlighted the value of archives for research and described the development of a framework to help researchers leverage the archives. She illustrated the Big Data analysis interface of the BUDDAH project, described the planned output, and presented various case studies showing what can be done with that data.

Helen Hockx-Yu began her talk "Co-developing access to the UK Web Archive" with a reference to the earlier talk by Andy. She noted that a scenario that fits everyone's needs is difficult to find. She described the high-level requirements, including query building, corpus formation, annotation and curation, and in-corpus and whole-dataset analysis. She illustrated the SHINE interface, which provides features like full-text search, multi-facet filters, query history, and result export.

Finally, Josh Cowls presented his talk about the book "The Web as History: Using Web Archives to Understand the Past and the Present", to which he contributed a chapter. He talked about four second-level domains under the ".uk" TLD, namely ".co.uk", ".org.uk", ".ac.uk", and ".gov.uk", and how they are interlinked. He described the growth of the web presence of the BBC and British universities.

IIPC Chair Paul Wagner concluded the day by emphasizing that we have only started scratching the surface. He also noted in his concluding remarks that the context matters.

Day 2

Herbert Van de Sompel from Los Alamos National Laboratory started the second day's sessions by talking about "Memento Time Travel". He started with a brief introduction to Memento followed by a bag full of announcements. For ease of use in JavaScript clients, Memento now supports JSON responses along with the traditional Link format. The Memento aggregator now provides responses in two modes, DIY (Do It Yourself) and WDI (We Do It). The service now also allows exporting the Time Travel Archive Registry in a structured format. Due to the default Memento support in Open Wayback, various Web archives now natively support Memento. There is an extension available for MediaWiki to enable Memento support in it. Herbert described Robust Links (Hiberlink) and how they can be used to avoid reference rot. He said that their service usage is growing, hence they upgraded the infrastructure and are now using the Amazon cloud for hosting services. He noted that going forward everyone will be able to participate by running Memento service instances in a distributed manner to provide load-balancing. He also demonstrated Ilya's work on constructing composite mementos from various sources to minimize temporal inconsistencies while visualizing the sources of the mementos.

Daniel Gomes from the Portuguese Web Archive talked about "Web Archive Information Retrieval". He started by classifying web archive information needs into three categories: Navigational, Informational, and Transactional. He noted that the usual way of accessing an archive is URL search, but the URL might not be known to the users. An alternative method is full-text search, which poses the challenge of relevance. Daniel described various relevance models in great detail and how to select features to maximize relevance. He announced that all the datasets and code are available for free under an open source license. The code is hosted on Google Code, but due to the announcement that the service is being sunset, the code will be migrated to GitHub soon.

After this talk, there was a short break followed by the announcement that the remaining sessions of the day would have two parallel tracks. It was hard to choose one track over the other, but I can watch the missed sessions later when the video recordings are made available. Later the parallel sessions were interfering with each other, so the microphone was turned off.

After the break Ilya Kreymer gave a live demo of his recent work "Web Archiving for all: Building WebRecorder.io". He acknowledged the collaboration with Rhizome and announced the availability of an invite-only beta implementation of WebRecorder. He demonstrated how WebRecorder can be used to perform personal archiving in What You See Is What You Archive (WYSIWYA) mode.

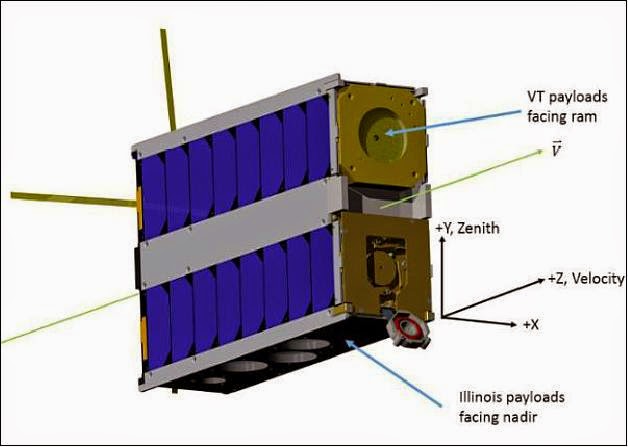

Zhiwu Xie from Virginia Tech presented "Archiving transactions towards an uninterruptible web service". He described an indirection layer between the web application server and the client that archives each successful response; when the server returns a 4xx/5xx failure response, the layer serves the most recent copy of the resource from the transactional archive. From the clients' perspective it is similar in functionality to services like CloudFlare, but it has the added advantage of building a transactional archive for website owners. Zhiwu demonstrated the implementation by repeatedly reloading two web pages, one served through the UWS and the other connected directly to a web application server that returned the current timestamp with random failures. He mentioned that the system is not ready for prime time yet.
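The behavior of that indirection layer can be sketched in a few lines. This is only an illustration of the idea as I understood it from the talk, not the UWS implementation: the class name, the in-memory dictionary used as the "archive", and the `origin` callable standing in for the web application server are all assumptions.

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]  # (HTTP status code, body)


class TransactionalArchiveProxy:
    """Hypothetical indirection layer between clients and an origin server."""

    def __init__(self, origin: Callable[[str], Response]) -> None:
        self.origin = origin               # stand-in for the web application server
        self.archive: Dict[str, str] = {}  # most recent successful body per path

    def fetch(self, path: str) -> Response:
        status, body = self.origin(path)
        if 200 <= status < 300:
            # Archive every successful response, then pass it through.
            self.archive[path] = body
            return status, body
        if status >= 400 and path in self.archive:
            # On a 4xx/5xx failure, serve the most recent archived copy.
            return 200, self.archive[path]
        # Nothing archived yet (or a non-failure status): pass through as-is.
        return status, body
```

A real deployment would keep every archived response with its timestamp rather than only the latest body, since the point of a transactional archive is the full history.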

During the lunch break I was with Andy, Kristinn, and Roger, and we had a free-style conversation on advanced crawlers, CDX indexer memory error issues, the possibility of implementing a CDX indexer in Go, separating data and view layers in Wayback for easy customization, some YouTube videos such as "Is Your Red The Same as My Red?" and the hilarious "If Google was a Guy", TED talks such as "Can we create new senses for humans?", "Evacuated Tube Transport Technologies (ET3)", and the possible weather in Iceland around the time IIPC GA 2016 is scheduled.

Jefferson Bailey presented his talk on "Web Archives as research datasets". With various examples and illustrations from Archive-It collections he established the point that web archives are great sources of data for research. He noted that WAT is a compact and easily parsable metadata file format whose files are about 18% of the size of the WARC data files.

Ian Milligan from the University of Waterloo presented his talk on "WARCs, WATs, and wgets: Opportunity and Challenge for a Historian Amongst Three Types of Web Archives". He described the importance of web archives and why historians should use them. His talk was primarily based on three case studies: Wide Web Scrape, GeoCities End-of-Life Torrent, and Archive-It Longitudinal Collections, Canadian Political Parties & Labour Organizations. I enjoyed his style of storytelling, some mesmerizing visualizations, and in particular the GeoCities case study. He noted that the GeoCities data was not in the form of WARC files; instead it was a regular Wget crawl.

After a short break Ahmed AlSum from the Stanford University Library (and a WS-DL alumnus) presented his work on "Restoring the oldest U.S. website". He described how he turned yearly backup files of the SLAC website from 1992 to 1999 into WARC and CDX files with the help of Wget and some manual changes, to mimic the effect of the site having been captured in those early days. These transformations were necessary to allow a modern Open Wayback system to replay it correctly. Ahmed briefly handed the microphone over to Joan Winters, who was responsible for taking backups of the website in the early days, and she described how they did it. Ahmed also mentioned that the Wayback codebase had hardcoded 1996 as the earliest year, which was fixed by making it configurable.

As an afterthought, I would love to see this effort combined with Satya's Olive Archive so that everything from the server stack to the browser experience can be replicated as closely to the original environment as possible.

Federico Nanni from the University of Bologna presented "Reconstructing a lost website". Looking at the schedule, my first impression was that it would be a talk about tools to restore any lost website and reconstruct all the pages and links with the help of archives. I was wondering if they were aware of Warrick, a tool that was developed at Old Dominion University with this very objective. But it turned out to be a case study of the world's oldest university, established around 1088. One of the many challenges he mentioned in reconstructing the university website was the exclusion of the site from the Wayback Machine for unknown reasons, which they tried to resolve together with the Internet Archive. Amusingly, one of the many sources of snapshots was a clone of the site prepared by student protesters.

The last speaker of the second day, Michael L. Nelson from Old Dominion University, presented the work of his student Scott G. Ainsworth, "Evaluating the temporal coherence of archived pages". Using the Weather Underground site as an example, he demonstrated how archives can construct unrealistic pages because of temporal violations. He noted that, among the various categories of temporal violations, in at least 5% of cases there is a provable temporal violation. He also noted that temporal violation is not always a concern.

Day 3

The third day's sessions were in the Internet Archive building in San Francisco instead of the usual Li Ka Shing Center at Stanford University, Palo Alto. A couple of buses transported us to the IA and we enjoyed the bus trip through the valley as the weather was very good. The IA staff were very humble and welcoming. The emulator of classic games installed in the lobby of the IA turned out to be the prime center of attraction. We learned some interesting facts about the IA, such as that the building was a church which was acquired because of its similarity to the IA logo, and that the pillows in the hall were contributed by various websites with their domain names and logos printed on them.

Sessions before lunch were mainly related to consortium management and logistics. These included the Welcome to the Internet Archive by Brewster Kahle, the Chair address by Paul Wagner, the Communication report by Jason Webber, the Treasurer report by Peter Stirling, and Consortium renewal by the chair, followed by break-out discussions to gather ideas and opinions from the IIPC members on various topics. Also, the date and venue for the next general assembly were announced: April 11, 2016 in Reykjavik, Iceland.

After the lunch break, your author, Sawood Alam from Old Dominion University presented the progress report on "Profiling web archives" project, funded by IIPC. With the help of some examples and scenarios he established the point that the long tail of archive matters. He acknowledged the growing number of Memento compliant archives and the growth of use of Memento aggregator service. In order for the Memento aggregator to perform efficiently, it needs query routing support apart from caching which only helps when the requests are repeated before cache expires. Then he acknowledged two earlier profiling efforts one being a complete knowledge profile by Sanderson and the other minimalistic TLD only profile by AlSum. He described the limitations of the two profiles and explored the middle ground for various other possibilities. He evaluated his findings and concluded that his work so far gained up to 22% routing precision with less than 5% cost relative to the complete knowledge profile without any false negatives. Sawood also announced the availability of the code to generate profiles and benchmark them in a GitHub repository. In a later wrap-up session the chair Paul Wagner referred to Sawood's motivation slide in his own words, "sometimes good enough is not good enough."
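As a rough illustration of why a profile helps routing, here is a minimal sketch of the TLD-only end of the spectrum. It is not the code from the project repository; the function names and the archive names in the usage example are hypothetical. Because every held URI contributes its TLD to the profile, an archive that holds the requested URI is never skipped, which is the "no false negatives" property mentioned above.

```python
from typing import Dict, List, Set
from urllib.parse import urlsplit


def tld_of(uri: str) -> str:
    """Return the last label of the host name, e.g. 'uk' for 'www.bl.uk'."""
    host = urlsplit(uri).hostname or ""
    return host.rsplit(".", 1)[-1].lower()


def build_tld_profile(holdings: List[str]) -> Set[str]:
    """Summarize an archive's holdings as the set of TLDs it has captured."""
    return {tld_of(uri) for uri in holdings}


def route(uri: str, profiles: Dict[str, Set[str]]) -> List[str]:
    """Return the archives worth querying for this URI, per their profiles."""
    tld = tld_of(uri)
    return sorted(name for name, tlds in profiles.items() if tld in tlds)
```

For example, with `profiles = {"archive_a": build_tld_profile(["http://bbc.co.uk/"]), "archive_b": build_tld_profile(["http://sapo.pt/"])}`, a request for a ".pt" URI would only be sent to `archive_b`. Richer profiles trade a larger profile size for fewer wasted requests.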

During the break various IA staff members gave us a tour of the IA facility, including the book scanners, the television archive, an ATM, storage racks, and the music and video archive where they convert data from old recording media such as vinyl discs and cassettes.

After the break historian and writer Abby Smith Rumsey talked about "The Future of Memory in the Digital Age". Her talk was full of insightful and quotable statements. I will quote one of my favorites and leave the rest in the form of tweets. She says, "ask not what we can afford to save; ask what we can afford to lose".

Finally the founder of the Internet Archive, Brewster Kahle, took the stage and talked about digital archiving and the role of the IA through various initiatives including the book archive, music archive, and TV archive, to name a few. He described the zero-sum book lending model used by the Open Library for books that are not free for unlimited distribution. He invited all the archivists to create a common collective distributed library where people can share their resources such as computing power, storage, manpower, expertise, and connections. During the Q&A session I asked whether, when he thinks about collaboration, he envisions a model similar to inter-library loan, where peer libraries refer to other places in the form of external links if they do not have the resources but others do, or whether they would instead copy each other's resources. He responded, "both."

The chair gave a wrap-up talk and formally ended the third day's session. There was still some time before the buses left, so people engaged in conversation, games, and photographs while enjoying drinks and food. I particularly enjoyed a local ice cream named "It's-It", recommended by an IA staff member.

Day 4

On the fourth day Sara Aubry presented her talk on "Harvesting Digital Newspapers Behind Paywalls" in Berge Hall A, where the Harvesting Working Group was gathered, while IIPC's communication strategy session was going on in Hall B. She discussed her experience of working with news publishers to make their content more crawler friendly. The crawling and replay challenges include paywalls requiring authentication to grant access to the content and the inclusion of a daily changing date string in the seed URIs. They modified Wayback to fulfill their needs, but the modifications have not been committed back to the upstream repository. She said that if they are useful for the community, the changes can be pushed to the main repository.

Roger Coram presented his talk on "Supplementing Crawls with PhantomJS". I found his talk quite relevant to the work of my colleague Justin Brunelle. This is a necessary step to improve the quality of crawls, especially as sites are becoming more interactive with extensive use of JavaScript. For some pages, he uses CSS selectors and takes screenshots to later complement the rendering.

Kristinn Sigurðsson engaged everyone in a discussion about the "Future of Heritrix". He started with the question, "is Heritrix dead?" and I said to myself, "can we afford this?". This ignited the talk about what can be done to increase the activity on its development. I asked what is slowing down the development of Heritrix: is it out of ideas and new feature requests, or are there not enough contributors to continue the development? There was no clear answer to this question, but it helped continue the discussion. I also suggested that if new developers are afraid of making changes that would break the system and discourage upgrades, we could introduce a plug-in architecture where new features can be added as optional add-ons.

Helen Hockx-Yu took the microphone and talked about Open Wayback development. She gave a brief introduction to the development workflow and the periodic telecon. She also talked about the short and long term development goals, including better customization and internationalization support, displaying more metadata, ways to minimize live leaks, and acknowledging/visualizing temporal coherence.

After a short break Tom Cramer gave his talk on "APIs and Collaborative Software Development for Digital Libraries". He formally classified the software development models into five categories. He suggested that IIPC take the position of unifying the high-level API for each category of archiving tools so that they can be used interchangeably. This was very appealing to me because I had been thinking along the same lines and have done some architectural design of an orchestration system that achieves the same goal via a layer of indirection.

Daniel Vargas from LOCKSS presented his talk on "Streamlining deployment of web archiving tools" and demonstrated the use of Docker containers for deployment. He also demonstrated the use of plain WARC files on a regular file system and in HDFS with Hadoop clusters. I was glad to see someone else deploying Wayback in containers, as I was pushing some changes to the Open Wayback repository that will make containerization of Wayback easier.

During the lunch break Hunter Stern from the IA approached me and told me about the Umbra project to supplement the crawling of JS-rich pages. After lunch there was a short open mic session where each speaker got four minutes to introduce exciting stuff that they are working on. Unfortunately, due to the shortage of time I could not participate in it.

After the lunch break the Access Working Group gathered to talk about "Data mining and WAT files: format, tools and use cases". Peter Stirling, Sara Aubry, Vinay Goel, and Andy Jackson gave talks on "Using WAT at the BnF to map the First World War", "The WAT format and tools for creating WAT files", and "Use cases at Internet Archive and the British Library". Vinay had some really neat and interactive visualizations based on the WAT files. I talked to Vinay during the break and we came up with some interesting ideas to work on, such as building a content store indexed by hashes while using WAT files in conjunction for replay, and a WebSocket-based BOINC implementation in JavaScript to perform Hadoop-style distributed research operations on IA data on users' machines.

After a short break the Access Working Group talked about "Full-text search for web archives and Solr". Anshum Gupta, Andy Jackson, and Alex Thurman presented "Apache Solr: 5.0 and beyond", "Full-text search for web archives at the British Library", and "Solr-based full-text search in Columbia's Human Rights Web Archive" respectively. Anshum's talk was on the technical aspects of Solr while the other two talks were more like case studies.

Day 5

On the last day of the conference the Collection Development and Preservation Working Groups discussed their current state and plans in separate parallel tracks. Before the break I attended the Collection Development Working Group. They demonstrated Archive-It account functionality. I expressed the need for a web-based API to interact with the Archive-It service. I gave the example of a project I was working on a few years ago in which a feed reader periodically reads news feeds and sends the articles to a disaster classifier that Yasmin AlNoamany and Sawood Alam (me) built. If the classifier classified a news article as belonging to the disaster category, we wanted to archive that page immediately. Unfortunately, Archive-It did not provide a way to do that programmatically (unless we used page scraping or some headless browser), so we ended up using the WebCite service for that.

After the break I moved to the Preservation Working Group track where I had a talk scheduled. David S.H. Rosenthal presented his talk on "LOCKSS: Collaborative Distributed Web Archiving For Libraries". He described the workings of LOCKSS and how it has benefited the publishing industry. He described how Crawljax is used in LOCKSS to capture content that is loaded via Ajax. He also noted that most publishing sites try not to rely on Ajax and, if they do, they provide some other means to crawl their content to maintain their search engine ranking.

Sawood Alam (me) happened to be the last presenter of the conference, with his talk on "Archive Profile Serialization". This talk was a continuation of his earlier talk at the IA. He described what should be kept in profiles and how it should be organized. He also talked briefly about the implications of each data organization strategy. Finally he talked about the file format to be used and how it can affect the usefulness of the profiles. He noted that single-node file formats like XML, JSON, and YAML are not suitable for profiles, and he proposed an alternative format that is a fusion of the CDX and JSON formats. Kristinn's feedback was that this seems to be the right approach to serializing such data, but he strongly suggested naming the file format something other than CDXJSON.
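To give a feel for what a fusion of CDX and JSON could look like, here is a minimal sketch. The exact layout proposed in the talk is not reproduced here; this assumes a hypothetical layout in which each line holds a sortable lookup key, a space, and a JSON object with that key's details. The function names, the example keys, and the `count` field are illustrative only.

```python
import json
from typing import Dict, Tuple


def serialize(records: Dict[str, dict]) -> str:
    """Write one 'key JSON' line per record, sorted by key like a CDX file."""
    return "\n".join(
        f"{key} {json.dumps(records[key], sort_keys=True)}"
        for key in sorted(records)
    )


def parse_line(line: str) -> Tuple[str, dict]:
    """Split a line at the first space into its key and its JSON payload."""
    key, _, payload = line.strip().partition(" ")
    return key, json.loads(payload)
```

Unlike a single XML, JSON, or YAML document, a file laid out this way can be read one record at a time, and sorted files can be merged or searched by key without parsing the whole profile.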

While we were having lunch, the chair took the opportunity to wrap up the day and the conference. And now I would like to thank all the organizing team members, especially Jason Webber, Sabine Hartmann, Nicholas Taylor, and Ahmed AlSum, for organizing the event and making it possible.

In the afternoon Ahmed AlSum took me to the Computer History Museum where Marc Weber gave us a tour. It was a great place to visit after such an intense week.

Missed Talks

Due to the parallel tracks I missed some sessions that I wanted to attend, such as "SoLoGlo - an archiving and analysis service" by Martin Klein, "Web archive content analysis" by Mohammed Farag, "Identifying national parts of the internet" by Eld Zierau, "Warcbase: Building a scalable platform on HBase and Hadoop" by Jimmy Lin, "WARCrefs for deduplicating web archives" by Youssef Eldakar, and "WARC Standard Revision Workshop" by Clément Oury, to name a few. I hope the video recordings will be available soon. Meanwhile I was following the related tweets.

Conclusions

IIPC GA 2015 was a fantastic event. I had a great time, met a lot of new people and some whom I had only known on the Web, shared my ideas, and learned from others. It was the most amazing full week I have ever had. I appreciate the efforts of everyone who made this possible, including organizers, presenters, and attendees.

Resources

Please let us know the links of various resources related to IIPC GA 2015 to include below.

Official

Aggregations

Blog Posts

--

Sawood Alam
