Amid the smell of coffee and bagels, the crowd quieted down to listen to the opening by Jennifer Baek, who, in addition to getting us energized, also paused to recognize Adrianne Wadewitz and Cynthia Ashley-Nelson, two Wikipedians who, after contributing greatly to the Wikimedia movement, had recently passed. The mood became more uplifting as Sumana Harihareswara began her keynote, discussing the wonders of contributing knowledge and her experience with the Ada Initiative, Geek Feminism, and Hacker School. She detailed how the Wikimedia culture can learn from the experiences at Hacker School, discussing different methods of learning, and how these methods allow all of us to nurture learning in a group. She went on to discuss the difference between liberty and hospitality, and the importance of both to community, detailing how the group must ensure that individuals do not feel marginalized due to their gender or ethnicity, but also detailing how good hospitality engenders contribution as well as learning. Thus began WikiConference USA 2014 at 9:30 am, on May 30, 2014.

At 10:30 am, I attended a session on the Global Economic Map by Alex Peek. The Global Economic Map is a Wikidata project whose goal is to make economic data available to all in a consistent and easy-to-access format. It pulls in sources of data from the World Bank, UN Statistics, U.S. Census, the Open Knowledge Foundation, and more. It will use a bot to incorporate all of this data into a single location for processing. The project team is looking for community engagement to assist in the work.

At 10:50 am, Katie Filbert detailed what Wikidata is and what the community can do with it, which consists mainly of bots collecting information from many sources and consolidating it into a usable format using MediaWiki. The data is stored and accessible with XML, and is also multilingual. This data is also fed back into the other Wikimedia projects, like Wikipedia, for use in infoboxes. They are incorporating Lua into the mix in order to allow the infoboxes to be more intelligent about what data they are displaying. They will be developing a query interface for Wikidata so information can be more readily retrieved from their datastore.

At 11:24 am, Max Klein showed us how to answer big questions with Wikidata. In addition to the possibilities expressed in previous talks, Wikidata aims to provide modeling of all Wikipedias, allowing further analysis and comparison between each Wikipedia. He showed visual representations of the gender bias of each Wikipedia, how much each language writes about other languages, and a map of the connection of data within Wikipedia by geolocation. He showed us the exciting Wikidata Toolkit that allows for translation from XML to RDF and other formats as well as simplifying queries. The toolkit uses Java to generate JSON, which can be processed by Python for analysis.

At noon, Frances Hocutt gave a presentation on how to use the MediaWiki API to get data out of Wikipedia. She expounded upon the ability to extract specific structured data, such as links, from given Wikipedia pages. She mentioned that the data can be directly accessed in XML or JSON, but there is also the mwclient Python library, which may be easier to use. Afterwards, she led a workshop on using the API, guiding us through the API sandbox and the API documentation.
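As a rough illustration of the kind of request covered in the workshop, the sketch below asks the MediaWiki API for the links on a page and pulls the link titles out of the JSON response. The helper names, the User-Agent string, and the example page title are assumptions made for illustration, not code from the talk; the query parameters (`action=query`, `prop=links`, `titles`, `pllimit`, `format=json`) are standard API parameters.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_URL = "https://en.wikipedia.org/w/api.php"


def build_links_query(title, limit=50):
    """Build a URL asking the API for the links found on one page."""
    params = {
        "action": "query",
        "prop": "links",
        "titles": title,
        "pllimit": limit,
        "format": "json",
    }
    return API_URL + "?" + urlencode(params)


def extract_link_titles(response):
    """Collect link titles from a decoded action=query&prop=links response."""
    titles = []
    pages = response.get("query", {}).get("pages", {})
    for page in pages.values():
        for link in page.get("links", []):
            titles.append(link["title"])
    return titles


def fetch_links(title, limit=50):
    """Perform the request over the network and return the link titles."""
    # The User-Agent value here is a placeholder (assumption).
    request = Request(
        build_links_query(title, limit),
        headers={"User-Agent": "wikiconf-example/0.1"},
    )
    with urlopen(request) as reply:
        return extract_link_titles(json.load(reply))


if __name__ == "__main__":
    # Example page title chosen for illustration only.
    for link_title in fetch_links("Wikidata", limit=10):
        print(link_title)
```

Keeping the URL building and the response parsing separate from the network call makes it easy to try the parsing against a response copied out of the API sandbox.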

Our lunch was provided by the conference and our lunch keynote was given by DC Vito. He is a founding member of the Learning About Multimedia Project (LAMP), an organization that educates people about the effects of media in their lives. He wanted to highlight their LAMPlatoon effort and specifically their Media Breaker tool, which allows users to take existing commercials and other media, and edit them in a legal way to interject critical thinking and commentary. They are working on a deal with the Wikimedia Foundation to crowdsource the legal review of the uploaded media so that his organization can avoid lawsuits.

At 3:15 pm, Mathias Klang gave a presentation concerning the attribution of images on Wikipedia and how Wikipedia deals with the copyright of images. He highlighted that although images are important, the interesting part is often the caption and the metadata. He mentioned how sharing came first on the web, but it is only recently that the law has begun to catch up with such easy-to-use licenses as Creative Commons. His organization, Commons Machinery, is working to return that metadata, such as the license of the image, back to the image itself. He revealed Elogio, a browser plugin that allows one to gather and store resources from around the web while storing their legal metadata for later use. Then he mentioned that Wikimedia does not store the attribution metadata in a way that Elogio and other tools can find it. One of the audience members indicated that Wikimedia is actively interested in this.

At 4:15 pm, Timothy A. Thompson and Mairelys Lemus-Rojas gave a presentation on the Remixing Archival Metadata Project (RAMP) Editor, which is a browser-based tool that uses traditional library finding aids to create individual and organization authority pages for creators of archival collections. Under the hood, it takes in a traditional finding aid as an Encoded Archival Context-Corporate Bodies, Persons, and Families (EAC-CPF) record. Then it processes this file, pulling in relevant data from other external sources, such as Encoded Archival Description (EAD) files and OCLC. Then it transforms these EAC-CPF records into wiki markup, allowing for direct publication to English Wikipedia via the Wikipedia API. The goal is to improve Wikipedia's existing authority records for individuals and organizations with data from other sources.

At 5:45 pm, Jennifer Baek presented her closing remarks, mentioning the conference reception on Saturday at 6:00 pm. Thus we closed out the first day and socialized with Sumana and several other Wikimedians for the next hour.

At 8:30 am on Saturday, our next morning keynote was given by Phoebe Ayers, who wanted to discuss the state of the Wikimedia movement and several community projects. She detailed the growth of Wikipedia, even in the last few years, while also expressing concern over the dip in editing on English Wikipedia in recent years, echoing the concerns of a recent paper picked up by the popular press. She showed how there are many classes being taught on Wikipedia right now. She highlighted many of the current projects being worked on by the Wikimedia community, briefly focusing on the Wikidata Game as a way to encourage data contribution via gamification. She mentioned that the Wikimedia Foundation has been focusing on the new VisualEditor and other initiatives to support their editors, including grantmaking. She closed with big questions that face the Wikimedia community, such as promoting growth, providing access for all, and fighting government and corporate censorship. And our second day of fun had started.

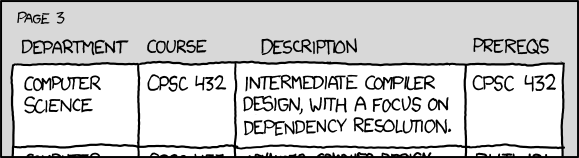

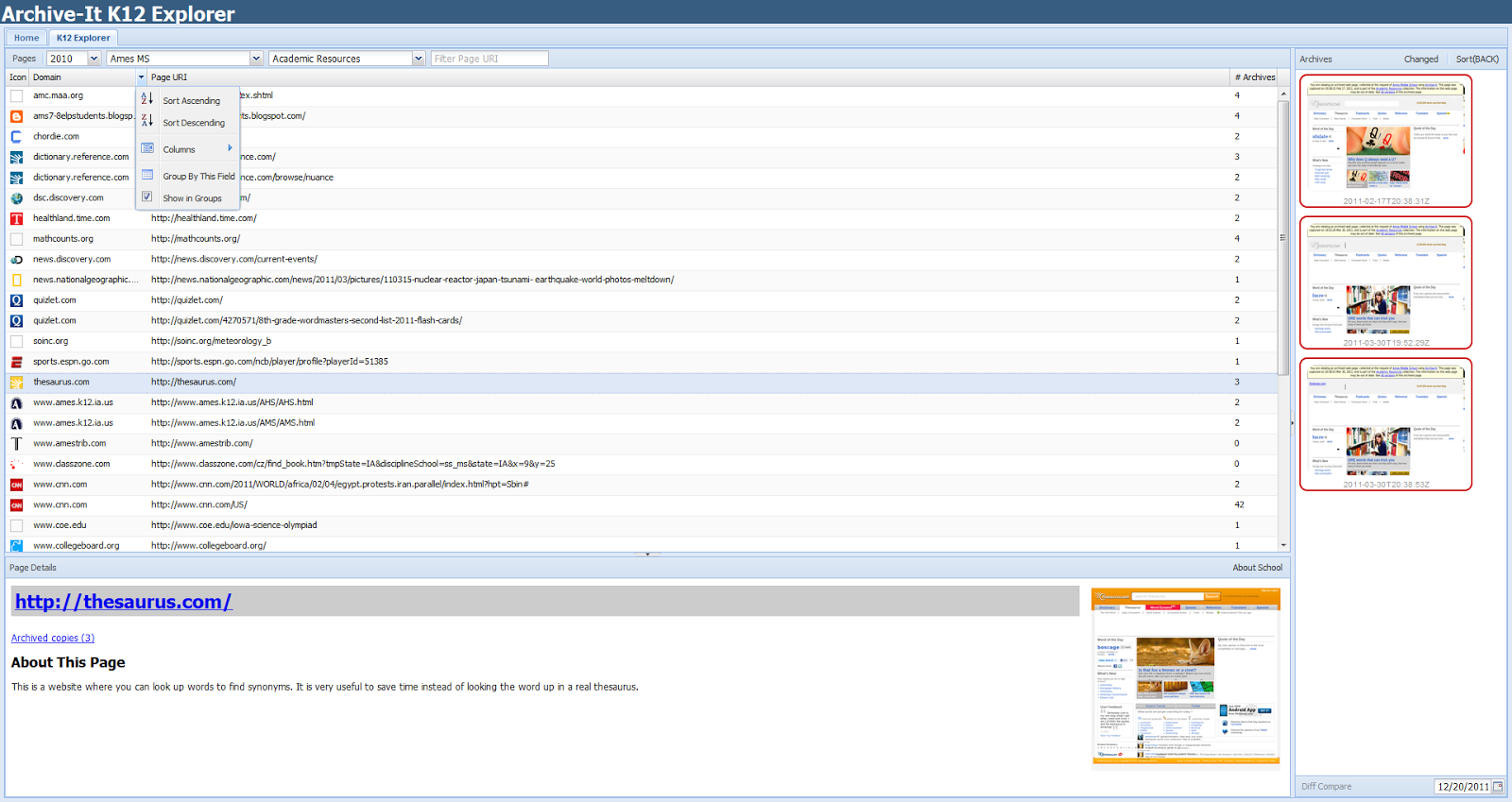

At 10:15 am, I began my first talk.

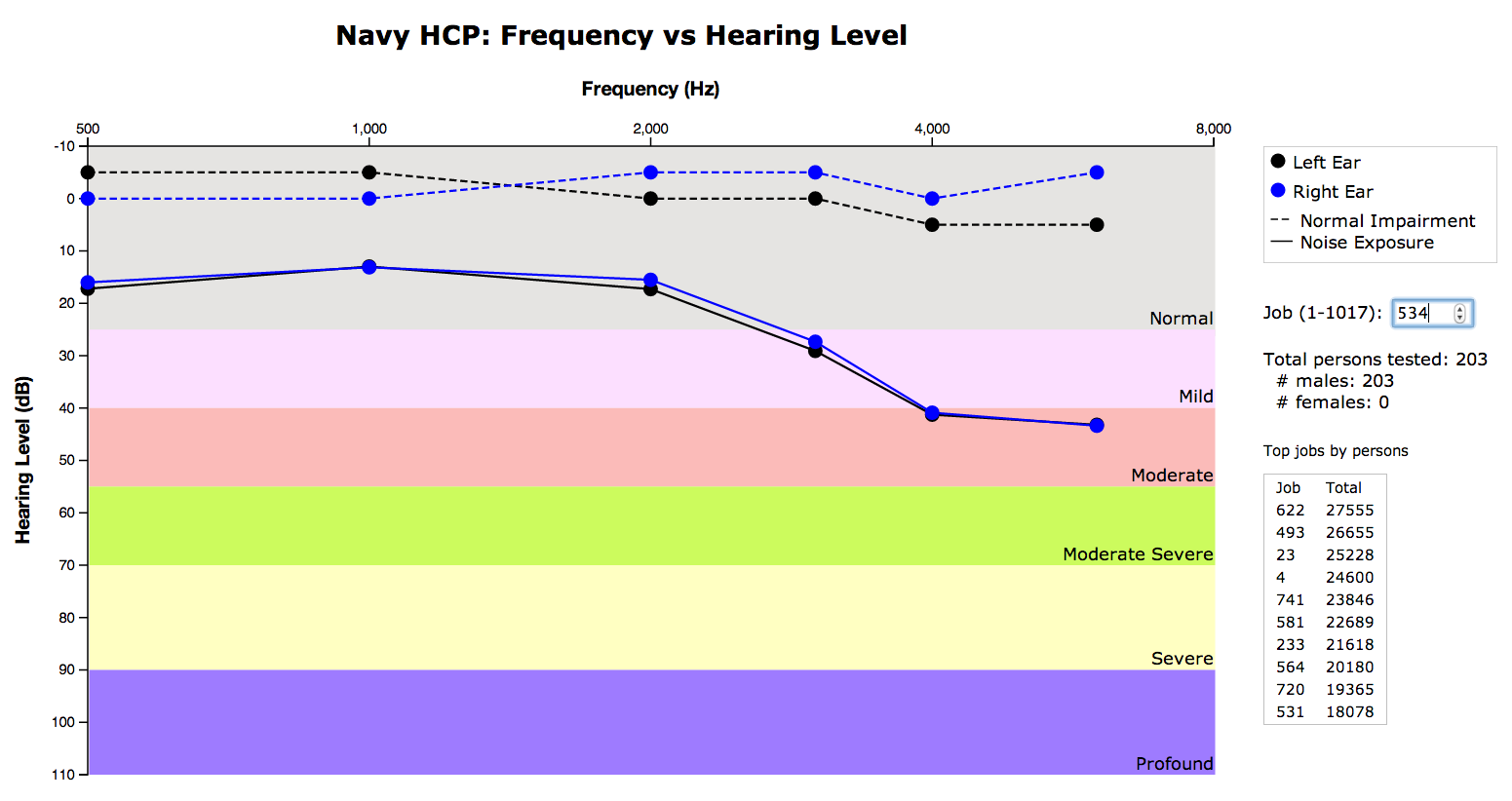

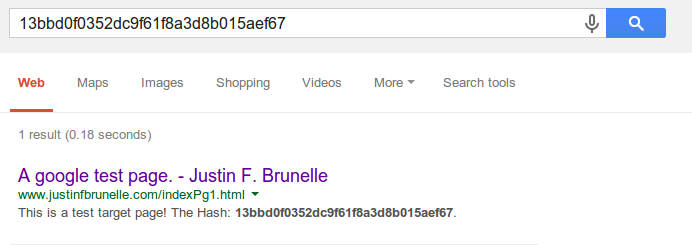

In my talk, Reconstructing the past with MediaWiki, I detailed our attempts and successes in bringing temporal coherence to MediaWiki using the Memento MediaWiki Extension. I partitioned the problem into the individual resources needed to faithfully reproduce the past revision of a web page. I covered old HTML, images, CSS, and JavaScript and how MediaWiki should be able to achieve temporal coherence because all of these resources are present in MediaWiki.
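For readers unfamiliar with Memento (RFC 7089), the protocol works by datetime negotiation: a client sends an `Accept-Datetime` header with the desired point in time, and the archived version that comes back carries a `Memento-Datetime` header saying when it was captured. The sketch below only shows how those two header values can be produced and read in Python. The function names are hypothetical and this is not the extension's own code.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def accept_datetime_header(when):
    """Return request headers asking for a resource as it existed at `when`.

    `when` must be a timezone-aware datetime; it is converted to UTC and
    written in the HTTP date format that Memento uses.
    """
    utc_time = when.astimezone(timezone.utc)
    return {"Accept-Datetime": format_datetime(utc_time, usegmt=True)}


def memento_datetime(headers):
    """Read the capture time of a memento from its response headers."""
    value = headers.get("Memento-Datetime")
    if value is None:
        return None  # the response was not a memento
    return parsedate_to_datetime(value)


if __name__ == "__main__":
    # Ask for a page as it looked when the conference opened.
    opening = datetime(2014, 5, 30, 9, 30, tzinfo=timezone.utc)
    print(accept_datetime_header(opening))
```

The returned dictionary can be passed as the headers of any HTTP request aimed at a Memento-enabled server.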

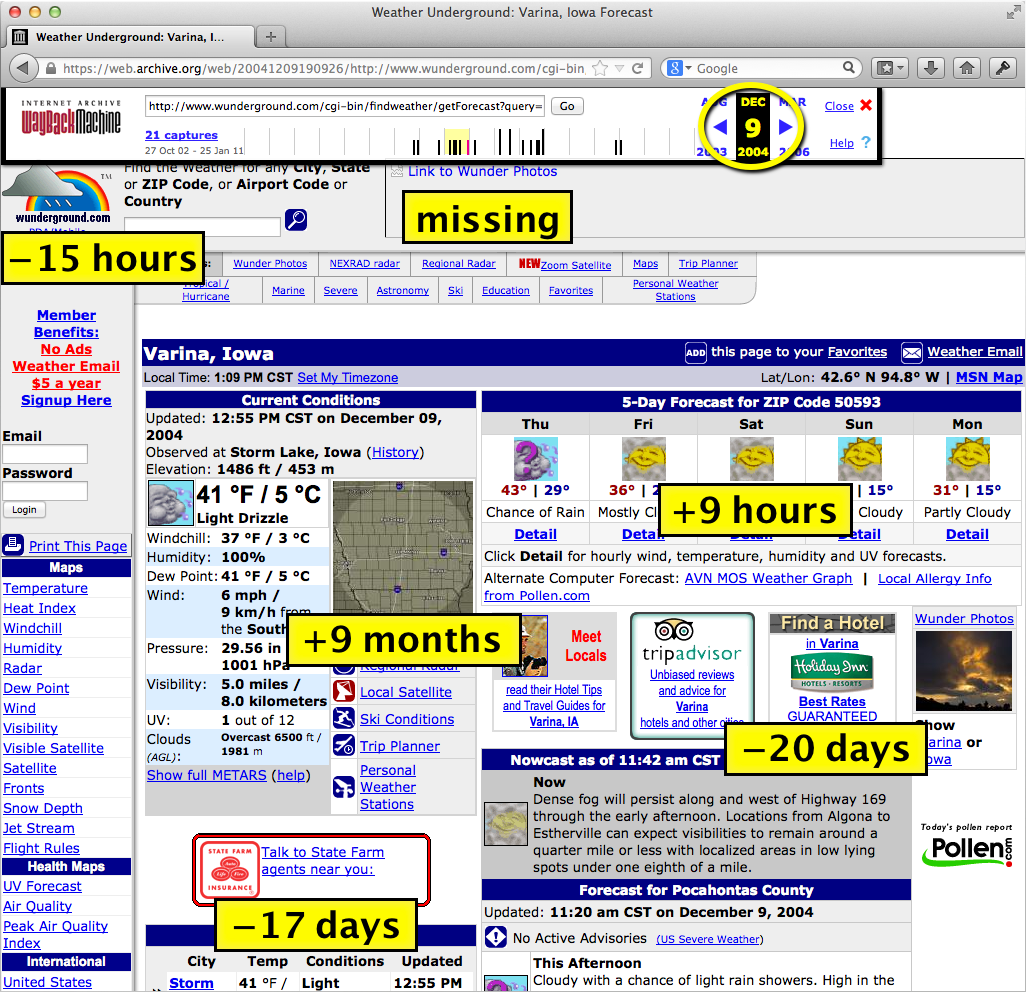

My second talk, Using the Memento MediaWiki Extension to Avoid Spoilers, detailed a specific use case of the Memento MediaWiki Extension. I showed how we could avoid spoilers by using Memento. This generated a lot of interest from the crowd. Some wanted to know when it would be implemented. Others indicated that there were past efforts to implement spoiler notices in Wikipedia but they were never embraced by the Wikipedia development team.
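One way to picture the spoiler use case, assuming the reader picks a datetime from before the episode they have not yet seen, is as a lookup for the latest page revision saved at or before that datetime. The sketch below does that lookup over an in-memory list; the revision IDs, timestamps, and function name are invented for illustration and do not come from the talk or the extension.

```python
from bisect import bisect_right
from datetime import datetime, timezone


def revision_as_of(revisions, cutoff):
    """Return the ID of the latest revision saved at or before `cutoff`.

    `revisions` is a list of (timestamp, revision_id) pairs sorted by
    timestamp in ascending order. Returns None if the page did not exist
    yet at `cutoff`.
    """
    timestamps = [timestamp for timestamp, _ in revisions]
    index = bisect_right(timestamps, cutoff)
    if index == 0:
        return None
    return revisions[index - 1][1]


if __name__ == "__main__":
    # Hypothetical revision history for a wiki page about a TV character.
    history = [
        (datetime(2014, 4, 1, tzinfo=timezone.utc), 101),
        (datetime(2014, 4, 20, tzinfo=timezone.utc), 102),
        (datetime(2014, 5, 11, tzinfo=timezone.utc), 103),
    ]
    # The reader has only watched episodes that aired before May 1.
    last_watched = datetime(2014, 5, 1, tzinfo=timezone.utc)
    print(revision_as_of(history, last_watched))
```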

At noon, Isarra Yos gave a presentation on vector graphics, detailing the importance of their use on Wikipedia. She mentioned how Wikimedia uses librsvg for rendering SVG images, but Inkscape gets better results. She has been unable to convince Wikimedia to change because of performance and security considerations. She also detailed the issues in rendering complex images with vector graphics, and why bitmaps are used instead. Using Inkscape, she showed how to convert bitmaps into vector images.

At 12:30 pm, Jon Liechty gave a presentation on languages in Wikimedia Commons. He indicated that half of Wikimedia uses the English language template, but the rest of the languages fall off logarithmically. He is concerned about the "exponential hole" separating the languages on each side of the curve. He has reached out to different language communities to introduce Commons to them in order to get more participation from those groups. He also indicated that some teachers are using Wikimedia Commons in their foreign language courses.

After lunch, Christie Koehler, a community builder from Mozilla, gave a presentation on encouraging community building in Wikipedia. She indicated that community builders are not merely specialized people; all of us, by virtue of working together, are community builders. She has been instrumental in growing Open Source Bridge, an event that brings together discussions on all kinds of open source projects, both for technical and maker communities. According to her, a community provides access to experienced people you can learn from, and also provides experienced people the ability to deepen skills by letting them share their knowledge in new ways. She detailed how it is important for a community to be accessible socially and logistically, otherwise the community will not be as successful. She highlighted how a community must also preserve and share knowledge for present and future methods. She mentioned that some resources in a community may be essential, but may also be invisible until they are no longer available, so it is important to value those who maintain these resources. She also mentioned how important it is for communities to value all contributions, not just those from the most frequent contributors.

At 3:15 pm, Jason Q. Ng gave a highly attended talk on a comparison of Chinese Wikipedia with Hudong and Baidu Baike. He works on Blocked on Weibo, which is a project showing what content Weibo blocks that is otherwise available on the web. He mentioned that censorship can originate from a government, industry, or even users. Sensitive topics flourish on Chinese Wikipedia, which creates problems for those entities that want to censor information. Hudong Baike and Baidu Baike are far more dominant than Wikipedia in China, even though they censor their content. He has analyzed articles and keywords between these three encyclopedias, using HTTP status codes, character count, number of likes, number of edits, number of deleted edits, and whether an article is locked from editing, to determine if a topic is censored in some way.

At 5:45 pm, James Hare gave closing remarks detailing the organizations that made the conference possible. Richard Knipel, of Wikimedia NYC, told us about his organization and how they are trying to grow their Wikimedia chapter within the New York metropolitan area. James Hare returned to the podium and told us about the reception upstairs.

At 6:00 pm, we all got together on the fifth floor, got to know each other, and discussed the events of the day at the conference reception.

Sunday was the unstructured unconference. There were lightning talks and shorter discussions on digitizing books (George Chris), video on Wikimedia and the Internet Archive (Andrew Lih), new projects from Wikidata (Katie Filbert), contribution strategies for Wikipedia (Max Klein), low Earth micro-satellites (Gerald Shields), the importance of free access to laws via Hebrew WikiSource (Asaf Bartov), the MozillaWiki (Joelle Fleurantin), ACAWiki, religion on Wikipedia, Wikimedia program evaluation and design (Edward Galvez), Wikimedia meetups in various places, Wikipedia in education (Flora Calvez), and issues with Wikimedia Commons (Jarek Tuszynski).

I spent time chatting with Sumana Harihareswara, Frances Hocutt, Katie Filbert, Brian Wolff, and others about the issues facing Wikimedia. I was impressed by the combination of legal and social challenges to Wikimedia. It helped me understand the complexity of their mission.

At the end of Sunday, information was exchanged, goodbyes were said, lights were turned off, and we all spread back to the corners of the Earth from which we came, but within each of us was a renewed spirit to improve the community of knowledge and contribute.

-Shawn M. Jones